The all data revolution

Big Data is neither a fad nor a panacea.

Date: April 2019

Area of expertise: Research and Evidence (R&E)

Keywords: Data Innovation, Evidence use, Qualitative Data Collection, Data collection

Office: OPM United Kingdom

“By using these [big] data to build a predictive, computational theory of human behaviour we can hope to engineer better social systems.”

This bold claim, made by the social physics team at the MIT Media Lab, sums up over a decade of exuberant enthusiasm for the promises of Big Data. That enthusiasm has also spread to development work, where Big Data is sometimes described as crucial for addressing development issues at all scales, including measuring and achieving the Sustainable Development Goals.

Big Data essentially refers to a collection of large volumes of new data. It’s often defined by the 3Vs: data of very large volume; produced at high velocity; and of high variety. The data revolution, in turn, is the explosion in the availability of Big Data. However, despite the buzz, the data revolution has yet to deliver on its promises. Is the data revolution a big fad?

Widening the lens

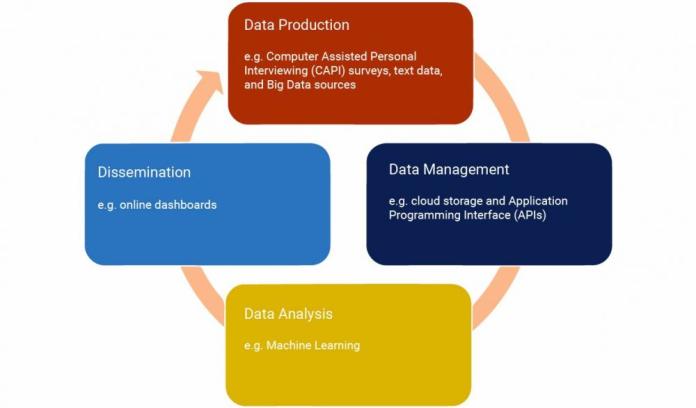

Not at all. The way in which data is used is undergoing fundamental changes in development work and these changes go beyond the size of the data. What we are witnessing is an ‘all data revolution’ rather than a Big Data revolution, which affects all stages of the data cycle. It is driving transformative innovations not only in the type of data collected and used, which includes Big Data, but also how it is managed and shared, how it is analysed, and how results of these analyses are disseminated. Focussing on Big Data alone means missing out on some of the most exciting parts of this revolution.

Data production: digitalisation in the field

Undeniably, data sources and what can be conceived of as ‘analysable data’ are changing. Big datasets are becoming available for the purposes of public policy analysis and research, driven mainly by digitalisation and the omnipresent internet. However, it is important to note that Big Data is not a like-for-like replacement for traditional data sources. Surveys continue to be relevant. In fact, it is often conventional survey data and statistical theory that are used to build Big Data algorithms and validate their outputs.

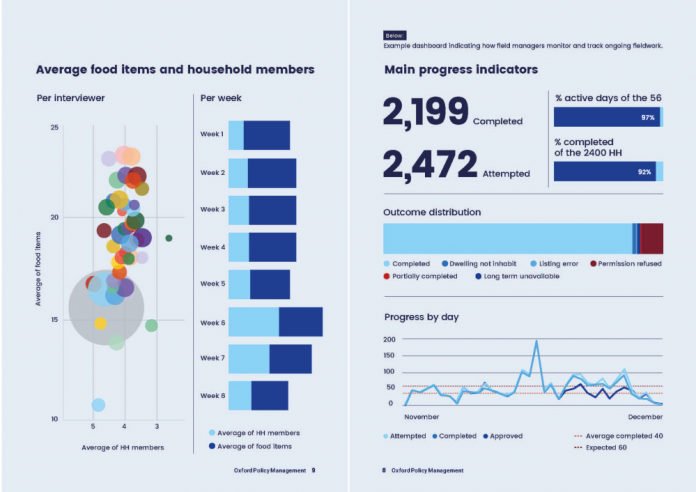

The data revolution is also affecting the way in which such surveys are being implemented. Collecting survey data now often involves using wireless tablets. This has significant implications for what data collection looks like: instantly shareable survey data and metadata (e.g. on the length of interviews) can be used for real-time quality assurance. Online dashboards can be programmed to load the latest data from servers automatically and can provide shareable, interactive visualisations of a survey’s progress. GPS systems can be used to geo-reference data, photography can be used to augment questionnaires with imagery data, and voice-recording can be used to collect sound-bites as part of the interview process. Much of this can be deployed to standard smartphones and tablets, which allows creative solutions for collecting data in the field, especially in hard-to-reach places and communities. An excellent example of this is Girl Effect’s TEGA platform. Remote surveying using mobile phones and SMS messaging is also increasingly being employed, a further sign of how quickly the data collection toolbox is evolving.
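As an illustration of the real-time quality assurance mentioned above, the following is a minimal sketch of how interview-length metadata might be screened. It assumes a simple rule (flag any interview shorter than half the median duration); the function name, the threshold, the interview IDs, and the durations are all hypothetical and chosen only for illustration, not taken from any particular survey platform.

```python
from statistics import median


def flag_short_interviews(durations_min, threshold_ratio=0.5):
    """Return the IDs of interviews that look suspiciously short.

    durations_min maps a (hypothetical) interview ID to its length in minutes.
    An interview is flagged when its duration falls below
    threshold_ratio times the median duration of all interviews.
    """
    if not durations_min:
        return []
    cutoff = threshold_ratio * median(durations_min.values())
    return sorted(i for i, d in durations_min.items() if d < cutoff)


# Made-up metadata, for illustration only.
durations = {
    "hh-001": 42.0,
    "hh-002": 38.5,
    "hh-003": 9.0,
    "hh-004": 45.0,
    "hh-005": 40.0,
}
flagged = flag_short_interviews(durations)
print(flagged)
```

In practice a survey team would likely combine a check like this with others (for example on GPS coordinates or skipped questions) and review flagged interviews manually rather than discard them automatically.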

Data analysis: the rise of the machine

Accompanying these changes in data production is a fundamental shift in how data can be analysed. This now involves employing a set of techniques that are very different from what was common 10 to 15 years ago. International development practitioners are adopting insights from computer science and modern data science more broadly. Most prominently, this refers to the rise of machine learning techniques. The key innovation has been to make use of exponentially increased computational capacity to estimate relationships in data using analytical techniques that were previously too costly to implement. These techniques have been astonishingly successful, as they can combine a wide variety of different types of data in the same analytical model, for example survey data, geological information, and different sets of satellite data. Two examples are using mobile phone data to predict poverty and predicting infectious disease outbreaks. These techniques have also allowed for the analysis of data coming from the ‘data exhaust’, the huge amounts of messy data produced by online activities and the Internet of Things, which would have overwhelmed traditional methods.

Importantly, these methods are not only applicable to Big Data: they are also influencing traditional statistical and economic research approaches. For example, Hal Varian, Professor of Economics at University of California Berkeley, was an early proponent of the idea that economics students should “go to the computer science department and take a class in machine learning”. There is now a stream of research on how these new methods can be combined with traditional approaches. This means that anyone’s work with data will be affected by these shifts in data analysis techniques.

Dissemination: speed, interactivity, and sharing

While perhaps less prominent, innovations on the dissemination side of the data cycle are as profound as those in other areas. These innovations have three main characteristics. First, as web-based solutions exist for the three previous steps in the data cycle and can be seamlessly integrated, the speed of analysis and dissemination has significantly increased. In the past, implementing a survey, analysing the data, and writing a report meant that results could take months or even years to reach policymakers. Now, if data is automatically or regularly produced and stored on an online system, software can analyse the data automatically. Results can be available to the policymaker almost instantly. Second, dashboard dissemination solutions now allow for interactivity where previously reports supplied a fixed set of analyses. Digital dashboards allow clients to access, interact with, and dig deeper into the data, looking at the analyses from different perspectives within the same organisation.

Third, the format in which results are shared, be it interactive or not, is also changing, making those results available to a wider audience. Whereas reports previously might only have been available in print or as PDFs to be requested from research institutions, they can now be web-based and can be accessed with any computer, tablet, or smartphone. Additionally, classical statistical reporting can be integrated with other types of multimedia, such as videos or sound-bites. These trends have given rise to an entirely new field of work – data journalism – which is changing the way in which users of statistical information interact with data. Importantly, it is making more information more accessible to more people, helping widen civil society engagement and the potential for holding policymakers to account for the impacts of their decisions.
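The first of these characteristics, software analysing regularly produced data automatically, can be sketched in a few lines. This is only an illustrative sketch under stated assumptions: the record layout (`region`, `score`), the function name, and the sample values are hypothetical, and a real system would read records from its own online storage rather than from a list in memory.

```python
import json
from collections import defaultdict


def summarise_by_region(records):
    """Compute the number of records and the mean score for each region.

    Re-running this whenever new records arrive keeps the summary current,
    so a dashboard can display it without manual analysis.
    """
    totals = defaultdict(lambda: [0, 0.0])  # region -> [count, sum of scores]
    for rec in records:
        entry = totals[rec["region"]]
        entry[0] += 1
        entry[1] += rec["score"]
    return {
        region: {"n": n, "mean_score": round(total / n, 2)}
        for region, (n, total) in sorted(totals.items())
    }


# Made-up records, for illustration only.
records = [
    {"region": "North", "score": 3.0},
    {"region": "North", "score": 5.0},
    {"region": "South", "score": 4.0},
]
summary = summarise_by_region(records)
print(json.dumps(summary))  # JSON is a convenient format for a web dashboard
```

The summary is serialised to JSON here because web-based dashboards commonly consume that format; any other exchange format would serve the same purpose.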

Managing the change

Taken as a whole, this ‘all data revolution’ has significant implications for a wide variety of actors who interact with data. Donors and international organisations rely on data to efficiently target their aid and assistance. Governments and citizens consume data to guide policies and hold officials to account. In particular, given that innovations are appearing everywhere in the data cycle, it represents a sea change for the work of institutions currently operating across this cycle – national statistics offices for example.

Consequently, opportunities abound. On the one hand, it has never been easier to gather, analyse, and disseminate data. The drive towards quantification – what sociologist Steffen Mau calls the ‘metric society’ – means data on almost anything can be accessed with relative ease and immediacy, and in large volumes. At the Paris21 Forum, representatives from different national statistics offices and international organisations met to discuss the opportunities that this ‘new data ecosystem’ creates for national statistical systems.

On the other hand, changes to the data cycle give rise to a whole new set of challenges and risks: data quality concerns, ethical concerns about the rise of artificial intelligence, data privacy issues, and biases driven by machine learning techniques, to name a few.

Perhaps one of the main barriers to realising the potential of this ‘all data revolution’, though, is capacity, particularly in lower-income countries. Governments can’t simply rely on a pool of traditionally trained statisticians to deal with this – the changes in the data cycle mean that traditional techniques are no longer sufficient for capturing the full potential of data for policymaking. Properly trained data scientists are necessary, with the technical capacity to deal with changes throughout the data cycle.

We are seeing changes to redress the gap: websites are becoming savvier, cloud servers are replacing traditional storage mechanisms, and services are changing the way they analyse data. National statistics offices are also changing the way in which they hire talent and are embracing collaborations with new, emerging data actors. The danger, however, is that this becomes a game of catch-up, especially in lower- and middle-income countries. The extent to which the opportunities presented by the data revolution can be leveraged to foster growth and reduce poverty will depend on careful, sensitive management by both development practitioners and policymakers at an international level.

When Big Data first emerged in the form of large datasets brought about by digitalisation, it was seen to hold great promise and to be an answer to many global problems. However, a more nuanced picture of this trend is now emerging. A 2014 article in Science coined the term ‘Big Data Hubris’, defining it as “the often implicit assumption that big data are a substitute for, rather than supplement to, traditional data collection and analysis”. Clearly, this hubris needs to be avoided. However, while these initial silver bullet expectations of Big Data have not been met, innovations across the data cycle, taken together, certainly amount to a revolution. This has powerful implications for international development in many different areas, and evidence-based policymaking will be more accessible than ever.

Paul Jasper is a senior consultant and data innovation lead in the Quantitative Data Analysis team at OPM. He is an economist and econometrician with extensive experience in designing, implementing, and managing quantitative impact assessments and data analytics projects along the entire data cycle (production, wrangling, analysis, dissemination).

Paul’s recent work includes analysis of large household surveys and designing and implementing quantitative impact evaluations in a variety of thematic areas using econometric and modern analytical methods. He has also been involved in designing M&E systems for large multi-year public programmes, focusing on the use of rigorous quantitative evidence for M&E and Learning purposes. He is interested in methodological advances related to quantitative impact evaluations and applications of data science approaches to public policy issues.