When are large-scale surveys on education useful?

Large-scale surveys are time-consuming and expensive - but often vital. How do we know when to do them?

Date: May 2018

Area of expertise: Research and Evidence (R&E)

Keyword: Monitoring, Evaluation, and Learning (MEL)

Large-scale surveys on education take up valuable student, teacher, and headteacher time. They are also expensive. So they should be used judiciously with a keen eye to their value. How do we know when a survey like this will prove useful?

In recent flagship education publications, the World Bank and the UK’s Department for International Development (DFID) have underscored the importance of robust data on education. At Oxford Policy Management, we have conducted scores of large-scale surveys on education in recent years. This post sets out our reflections on how to make them worthwhile.

Start with clarity of purpose

Large-scale surveys on education can serve several important purposes, and those commissioning and conducting a survey should be very clear about which purpose it is serving. Without this clarity, resources can be misdirected or opportunities missed. For instance, a survey seeking to evaluate impact may fail to establish a control group at baseline. To establish the purpose of a survey, it helps to see how data can support education systems at different stages and with different key actors.

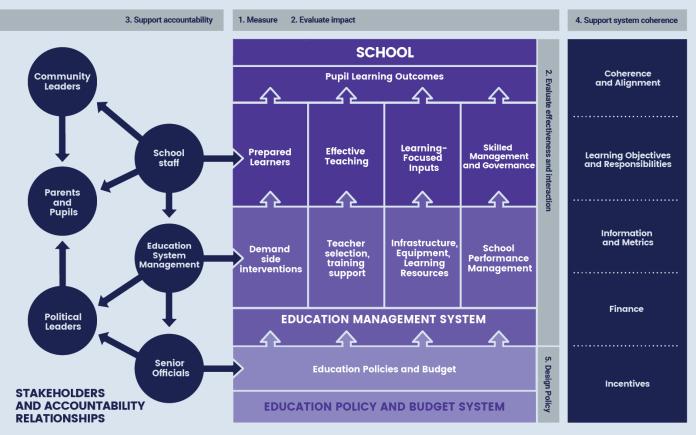

The figure above offers a way to think about this. At its centre is a stylised model of an education system, running from education policies and budgets through to learning by students. The circles on the left-hand side represent the key actors at each level of the system. The block on the right sets out the features of a system that need to be coherent for it to perform well. The numbered grey boxes give examples of where robust data can support education systems, and large-scale surveys can play a key role in all of them.

Choosing the correct measurements is vital

Measurements of learning outcomes are crucial to identify whether the education system is working as it should and to build partnerships in support of reform. Large-scale learning assessments by citizens, governments, or partners offer representative measures of key education outcomes that focus debate on how to improve. To be most useful, these assessments need to

be technically strong enough to stand up to rigorous scrutiny;

be comparable over time to track progress;

offer representative data on key student groups (nationwide, regionally, and of marginalised groups); and

measure the learning outcomes the country values, which probably include not just coverage of the school curriculum but also real-world applications of literacy, numeracy, and science, as well as ‘21st-century skills’.

[…] surveys that track spending. These measurements focus policy attention on what really matters. For example, the Annual Status of Education Report (ASER), a citizen-led learning assessment, has focused education policy debates in India on low levels of learning. Robust measurements can also be used to reward performance or disburse funds. This is far more difficult with qualitative or anecdotal measures, because they are more easily contested.

Surveys need to be undertaken at the right stage

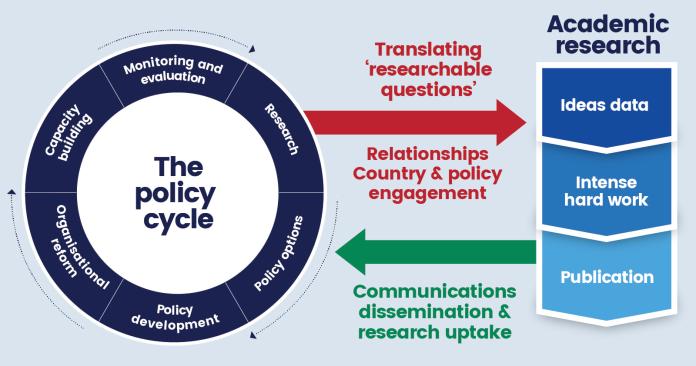

Large-scale surveys can play a key role in informing policy design. For instance, surveys that enumerate and analyse out-of-school children can sharpen policies to improve attendance and enrolment. Discrete choice experiments that generate statistically representative information on what different groups of teachers would need to be paid to work in rural areas can make teacher salary setting more efficient. More academic studies can also inform policy debates by generating new ideas for improving education outcomes. But to be useful, these surveys need to take place at the right time and with the active involvement of the policymakers who are going to use them.

Large-scale surveys can also be used to evaluate the impact or effectiveness of interventions to improve parts of the education system, particularly if those interventions are long-term and large-scale. But large-scale surveys are not always required or appropriate to generate robust evaluation findings, and when they are required, they do not always need to measure learning outcomes. Moreover, unlike some health interventions, education interventions typically take many years to improve outcomes. This requires policymakers and researchers to be patient with these surveys, and it is not always easy to judge when outcomes should be measured.

When is a large-scale survey necessary to evaluate an intervention?

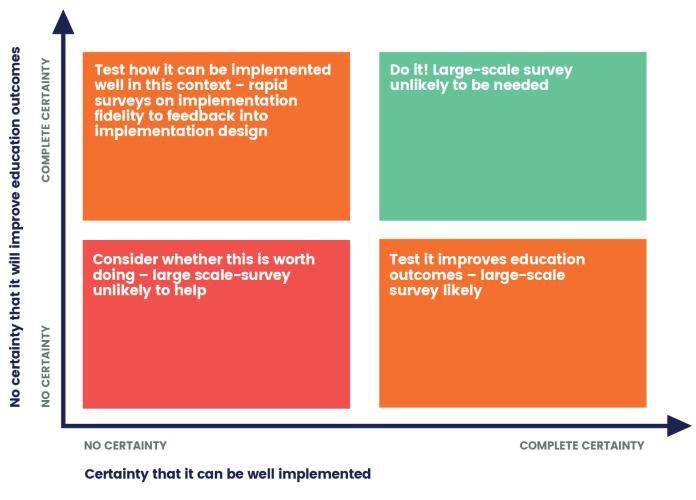

Broadly speaking, there are two factors to consider when looking at whether or not an education intervention would benefit from a large-scale survey. These are the likelihood that an intervention will improve learning outcomes (if implemented well), and the likelihood that the intervention can be implemented well in a given context. These are not absolutes, of course, but can be thought of as spectrums on two axes of a graph.

Start at the bottom right. Broadly speaking, where an education intervention is possible to implement but unproven in a given context (i.e. the implementers are unsure whether, if well implemented, it will lead to a desirable change in education outcomes such as learning, teaching, or attendance), there is a good case for an evaluation that robustly assesses this as a proof of concept. For complex interventions, a theory-based evaluation will usually be sensible. Robust measurement will likely involve a large-scale survey of education outcomes, and sometimes a randomised controlled trial. Without this, public money could be wasted on an intervention that is simply not doing what is needed.

Now cross to the top left (B). Where there is good reason to think that an intervention would improve education outcomes in a given context (because it has already been tried and measured), a large-scale survey on outcomes may not be required. Instead, smaller, more rapid measures of implementation fidelity (i.e. is it being delivered as intended?) will be valuable. These can be undertaken quickly, can cope with changes to implementation approaches, and can feed results back to implementers at high speed to inform changes to approach and delivery.

In some (rare) circumstances, there will be interventions that can clearly be implemented and clearly work (the top right of the diagram); in those cases, large-scale surveys are unlikely to be useful.

In between these poles, life is more complicated. The most appropriate action in the bottom left of the diagram is probably further diagnostic work. As we learn more about how an intervention can work in a given context, questions will arise about whether it improves the outcomes we care about: not just ‘intermediate’ outcomes, such as teacher effectiveness, but the final outcome of interest, which is whether students are learning. Until the top right corner of the diagram is reached, there is likely to be a role for large-scale surveys of some sort.
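The two-axis framework described in this section can be sketched as a simple decision rule. The Python below is a minimal, hypothetical illustration, not an Oxford Policy Management tool. It assumes each axis is scored as a likelihood between 0 and 1, with effectiveness on the vertical axis and implementability on the horizontal axis (inferred from the text, since the figure itself is not reproduced here). It also uses an arbitrary cut-off, the hypothetical `threshold` parameter, to place an intervention in a quadrant. The names `recommend` and `Recommendation` are invented for this sketch. Splitting each spectrum into two halves is a deliberate simplification: as the post notes, cases between the poles call for judgement.

```python
from enum import Enum


class Recommendation(Enum):
    """Hypothetical labels for the four quadrants described in the text."""
    PROOF_OF_CONCEPT = "robust outcome evaluation, often a large-scale survey"  # bottom right
    FIDELITY_MONITORING = "smaller, rapid measures of implementation fidelity"  # top left
    DIAGNOSTIC_WORK = "further diagnostic work"                                 # bottom left
    NO_LARGE_SURVEY = "large-scale survey unlikely to be useful"                # top right


def recommend(p_effective: float, p_implementable: float,
              threshold: float = 0.5) -> Recommendation:
    """Place an intervention in one quadrant of the two-axis framework.

    p_effective: assumed likelihood (0-1) that the intervention improves
        outcomes if implemented well (vertical axis).
    p_implementable: assumed likelihood (0-1) that it can be implemented
        well in this context (horizontal axis).
    threshold: hypothetical cut-off that splits each spectrum in two.
    """
    # Reject scores outside the assumed 0-1 likelihood scale.
    for name, p in (("p_effective", p_effective),
                    ("p_implementable", p_implementable)):
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {p}")

    proven = p_effective >= threshold       # upper half of the diagram
    feasible = p_implementable >= threshold  # right half of the diagram

    if feasible and not proven:
        return Recommendation.PROOF_OF_CONCEPT
    if proven and not feasible:
        return Recommendation.FIDELITY_MONITORING
    if proven and feasible:
        return Recommendation.NO_LARGE_SURVEY
    return Recommendation.DIAGNOSTIC_WORK
```

For example, `recommend(0.3, 0.8)` describes an intervention that is feasible but unproven, and it returns `Recommendation.PROOF_OF_CONCEPT`. In practice, any such scores would themselves be judgements, so a rule like this serves only to make the reasoning explicit. It cannot replace that reasoning.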

Take great care with the quality of implementation

Where there is a clear purpose and rationale for a large-scale survey, it is vital to pay close attention to implementing it robustly. Instruments and fieldwork approaches need careful field testing and validation. Surveys, particularly for evaluations, can be fragile. They are often vulnerable to a lack of clear, good-quality data for sampling frames; movements of teachers or students, or school closures (especially when tracking panels); natural or man-made disasters; and changes to implementation approaches. High-quality survey teams that respond well to these challenges are important. So is strong contingency planning and anticipation, through detailed manuals and training, real-time data from computer-assisted interviewing, strong quality control protocols, and flexible teams that can share roles and cope with the need to make fast changes. Making sure that some team members work through the whole survey cycle (design, fieldwork, analysis, and reporting) usually strengthens quality.

Seek ways to involve decision makers in surveys

Large-scale surveys are often hard to understand. At the same time, the practical experience of doing a large-scale survey offers fantastic opportunities to learn about an education system: visiting hundreds of schools and talking to thousands of students. Both points suggest involving decision makers in surveys, although this has to be achieved without compromising their independence or their day jobs.

This involvement can go beyond participation in workshops to discuss the objectives and results. As far as possible without affecting confidentiality or data quality, officials should join training sessions and field teams so that they experience and own the results first hand. This can, in addition, contribute to building government monitoring systems and culture. Finding the right level of stakeholder involvement – not just engagement – in a survey can lead to lasting improvements in education systems, over and above the survey itself.

We believe that large-scale surveys can play a crucial role in building better education systems. We hope our experience can help commissioners and implementers of large-scale surveys ensure that they do play this role.

This blog is based on a series of internal seminars held by Oxford Policy Management’s education team reflecting on our large-scale education surveys in Zanzibar, Tanzania, Nigeria, and Sierra Leone. While the views expressed remain those of the author, many thanks are due to the presenters (Lindsay Roots, Zara Majeed, Georgina Rawle, Nicola Ruddle, Sourovi De, Stuart Cameron, and Florian Friedrich) and all the participants in these seminars. In particular, Tom Pellens, Sourovi De, Oladele Akogun, and Steve Packer gave helpful comments on a first draft.